Comments

adracea (7y ago): Unless he's some kind of mad genius, which is hard to believe, I'd rather believe he's coding in assembly, which is still far from binary.

A-C-E (7y ago): @adracea *she

According to her, Bill Gates uses binary regularly...

I'd rather not argue against her claims without concrete evidence...

adracea (7y ago): @DeveloperACE http://timelessname.com/elfbin/

Found this around, and as I said, it's just some kind of smarty-pants assembly, machine coding, nothing too fancy like talking to R2-D2.

sirjofri (7y ago): But in fact, it is possible to "code" in binary directly. It is not a good idea to do that with 16-bit computers and higher; your brain will get confused and eventually burn out. It is not that different from writing in assembly, since assembly (OK, the simple forms of it) is just binary code written as mnemonics. I was able to write some easy functions in binary, like mov commands and compare/jump. For bigger projects, it's really hard to write them in assembly, and bigger projects start with floating-point calculations.

Anyway, I can say it's a great feeling to literally fill the RAM with zeros and ones and have the computer do something useful. It's the best coding experience I've ever had.

If you wanna try it, take some easy assembly code and look at the hexdump; most mnemonics make sense. And IMO hexadecimal is easier to read/write than pure binary. The conversion is easy too, since every hex digit stands for exactly four bits.
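To make the "look at the hexdump" idea concrete, here is a minimal sketch that hand-assembles a few 32-bit x86 instructions (mov, compare, conditional jump) into bytes and prints them as hex and as binary. It assumes x86 and covers only the handful of encodings used here; the helper names are hypothetical and not taken from any real assembler.

```python
import struct


def mov_eax_imm32(value: int) -> bytes:
    # MOV r32, imm32 is opcode B8+r; EAX is register number 0.
    # The 32-bit immediate is stored little-endian.
    return bytes([0xB8]) + struct.pack("<I", value)


def cmp_eax_imm8(value: int) -> bytes:
    # CMP r/m32, imm8 is "83 /7 ib". ModRM 0xF8 = 0b11_111_000:
    # register operand, /7 opcode extension, EAX.
    # The imm8 is sign-extended, so it must fit in -128..127.
    if not -128 <= value <= 127:
        raise ValueError("imm8 out of range")
    return bytes([0x83, 0xF8, value & 0xFF])


def jne_rel8(offset: int) -> bytes:
    # JNE rel8 is "75 cb": a signed byte relative to the next instruction.
    return bytes([0x75, offset & 0xFF])


RET = bytes([0xC3])

program = (
    mov_eax_imm32(1)    # mov eax, 1
    + cmp_eax_imm8(5)   # cmp eax, 5
    + jne_rel8(5)       # jne over the next 5-byte instruction
    + mov_eax_imm32(2)  # mov eax, 2 (skipped, since 1 != 5)
    + RET               # ret, with EAX still 1
)

# Hexdump view: two hex digits per byte.
hex_dump = " ".join(f"{b:02X}" for b in program)
# Binary view: each hex digit expands to exactly 4 bits.
bin_dump = " ".join(f"{b:08b}" for b in program)

print(hex_dump)
print(bin_dump)
```

Printing both views side by side shows why hex is the friendlier notation: `B8` and `10111000` are the same byte, but only one of them is easy to spot in a dump.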

sirjofri (7y ago): Btw, the very first computers were mostly programmed in a higher-level language on paper. This code was then transferred to some kind of "binary" code. The Zuse Z3 used film tapes (is that word correct?) with holes in them, iirc.

adracea (7y ago): @sirjofri More like punch cards... but still, while you might feel like one of the computer pioneers, could coding in binary be your go-to for, let's say... ARM projects, if you don't like embedded C?
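For the ARM case, a rough sketch of what "coding in binary" would look like: packing the bit fields of 16-bit Thumb instructions by hand. It assumes the Thumb-1 "move/compare/add/subtract immediate" format (as found on Cortex-M parts) and covers nothing else; `thumb_imm8` is a hypothetical helper, not part of any toolchain.

```python
def thumb_imm8(op: str, rd: int, imm8: int) -> int:
    # Thumb-1 immediate format:
    #   bits 15-13 = 001, bits 12-11 = op, bits 10-8 = Rd, bits 7-0 = imm8
    ops = {"movs": 0b00, "cmp": 0b01, "adds": 0b10, "subs": 0b11}
    if not (0 <= rd <= 7 and 0 <= imm8 <= 0xFF):
        raise ValueError("Rd must be r0-r7 and imm8 must fit in 8 bits")
    return (0b001 << 13) | (ops[op] << 11) | (rd << 8) | imm8


BX_LR = 0x4770  # bx lr: return to the caller

program = [
    thumb_imm8("movs", 0, 1),   # movs r0, #1
    thumb_imm8("adds", 0, 41),  # adds r0, #41 -> r0 = 42
    BX_LR,
]

for halfword in program:
    print(f"{halfword:016b}  0x{halfword:04X}")

# Thumb instructions are stored little-endian, two bytes each.
raw = b"".join(hw.to_bytes(2, "little") for hw in program)
```

Even this tiny function means juggling five bit fields per instruction, which is a good argument for letting an assembler (or embedded C) do it.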

sirjofri (7y ago): @adracea I am far from being a pioneer. I'm just a 22-year-old guy who started with DOS/BASIC and switched to assembly. I have always been interested in low-level programming/kernel development. Today I think I would use assembly embedded in C/C++ programs for speedup/file size and, of course, for coding C64 programs!
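Since C64 programs came up: a minimal sketch of hand-assembling a 6502 routine that sets the border colour to white. It assumes the standard C64 memory map (border colour register at $D020) and says nothing about where in memory the bytes are loaded; the constant and helper names are illustrative only.

```python
# A tiny subset of 6502 opcodes:
LDA_IMM = 0xA9  # LDA #nn   : load the accumulator with an immediate byte
STA_ABS = 0x8D  # STA nnnn  : store the accumulator at a 16-bit address
RTS = 0x60      # RTS       : return from subroutine

BORDER_COLOR = 0xD020  # C64 border colour register
WHITE = 1


def sta_abs(addr: int) -> bytes:
    # 16-bit addresses are stored little-endian: low byte first.
    return bytes([STA_ABS, addr & 0xFF, addr >> 8])


code = bytes([LDA_IMM, WHITE]) + sta_abs(BORDER_COLOR) + bytes([RTS])

# Decimal form, as you would put it in BASIC DATA lines for POKE
print(", ".join(str(b) for b in code))
# Hex form, as a monitor or hexdump would show it
print(" ".join(f"{b:02X}" for b in code))
```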

sirjofri (7y ago): @DLMousey It was so many years ago, and I don't think it would help. See for yourself: https://github.com/search/...=

ekyl (7y ago): Well, since binary is a number system rather than a language, and it can represent things like ASCII characters through a character lookup table, you could write code in ANY language "in binary".
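A small sketch of ekyl's point: source text written "in binary" is just each character's ASCII code spelled out in bits, and decoding it gives back ordinary text that still has to be compiled or interpreted. The function names are made up for illustration, and the sketch deliberately rejects non-ASCII input.

```python
def text_to_binary(text: str) -> str:
    # Look up each character's ASCII code and write it as 8 bits.
    for ch in text:
        if ord(ch) > 127:
            raise ValueError(f"not ASCII: {ch!r}")
    return " ".join(f"{ord(ch):08b}" for ch in text)


def binary_to_text(bits: str) -> str:
    # Reverse the lookup: parse each 8-bit group back into a character.
    return "".join(chr(int(group, 2)) for group in bits.split())


encoded = text_to_binary("Hi")
print(encoded)
print(binary_to_text(encoded))
```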

A-C-E (7y ago): @ekyl I feel like that's maybe where she's getting the binary thing from...

I have yet to actually see her write any code in any language she claims to know, so ¯\_(ツ)_/¯

You can even code binary in MS Paint, but nobody actually does that; it's just a cheap trick to print a pre-calculated string. Anyone who isn't a developer and talks about coding in binary either doesn't understand what they're saying or thinks "binary" is synonymous with "native", as opposed to interpreted or bytecode.
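To show that bytecode is also "binary" yet not native machine code, here is a sketch using CPython: a function's compiled body is a `bytes` object of instructions for the interpreter's virtual machine, not for the CPU. Opcode names and byte counts differ between CPython versions, so no expected output is given.

```python
import dis


def add_one(x):
    return x + 1


# CPython stores the compiled function as raw bytecode bytes.
# These run on the interpreter's VM, not directly on x86 or ARM.
raw = add_one.__code__.co_code
print(type(raw).__name__, len(raw))

# dis decodes those bytes back into readable opcode names.
for instr in dis.get_instructions(add_one):
    print(instr.opname, instr.argrepr)
```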

adracea (7y ago): Hell, if you take it that way, you can edit a JPEG file with a hex editor, embed payloads in it, and then upload it to a site for a successful payload delivery.

@adracea Most systems have been well patched against it, but that's been a thing. There are actually a lot of web exploits many devs aren't aware of; it's scary. Luckily, browser vendors focus on deprecating features that allow these exploits. Chrome patched against some techniques I used to share with devs for injecting debug code into prod; that was a sad week because I had to make browser extensions as a workaround.

adracea (7y ago): @Neotelos :) Still, that doesn't make it binary coding. Yeah, I can use binary or hex encoding to make some payload deliveries possible, but that is just JavaScript. Actual binary programming is, as discussed above, changing/writing machine code directly. Luckily for us, nowadays we have compiled languages for big apps; otherwise it would be pretty nasty writing whole apps the way they did back in the day.

A-C-E (7y ago): @adracea I'm just curious about the basics of every way you could possibly "code in binary".

Related Rants

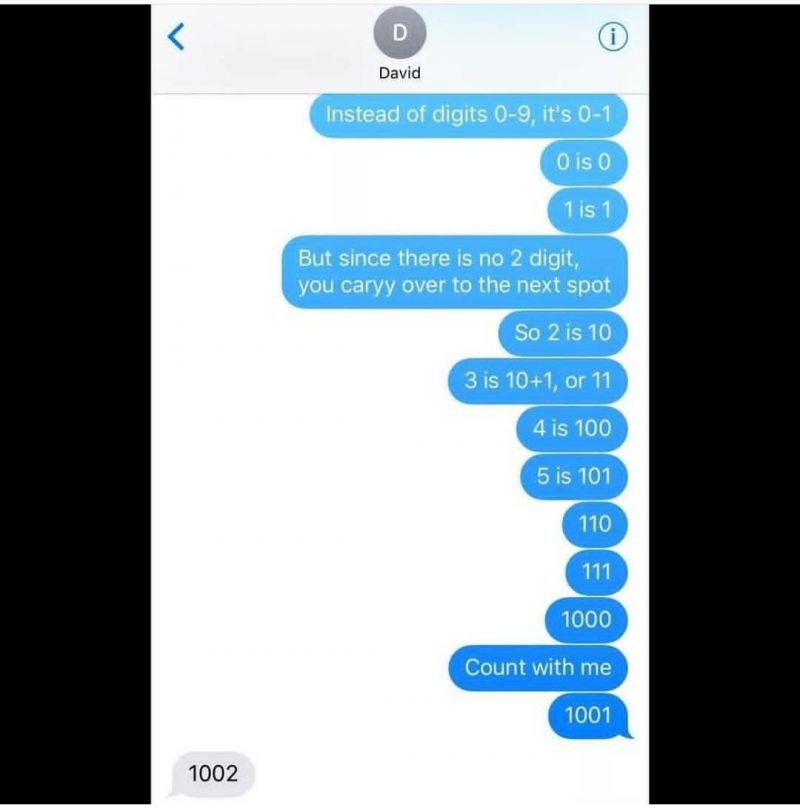

So, my wife sends me this picture because our car had 111,111 miles on it. Of course she called me a nerd when...

So, my wife sends me this picture because our car had 111,111 miles on it. Of course she called me a nerd when... Binary is easy to teach

Binary is easy to teach EDIT: devRant April Fools joke (2018)

-------------------------

Hey everyone! As some of you have already noti...

EDIT: devRant April Fools joke (2018)

-------------------------

Hey everyone! As some of you have already noti...

Code it in binary

(My friend claims to code in binary. Is this actually applicable, or just a novelty to impress people?)

binary

wk36